Automatic recovery using lvm-autosnap

TL;DR: https://github.com/intentionally-left-nil/lvm-autosnap

Running linux is an adventure. About a year ago, I switched from MacOS to Ubuntu (eww snaps), then Fedora (fine), then Manjaro (yeah that was a mistake) until finally landing on the final boss, Arch linux. Honestly, Arch is great. It does what I want, which is to spend an inordinate amount of time fixing things that used to work.

Snapshots to the rescue

In the face of inevitable failures, the only sane solution is to use snapshots to easily restore to a previous state when things go wrong. Originally, I was using snapper to manage backups using btrfs. It actually works great, and if you’re using btrfs I highly recommend just picking an existing tool and sticking with it.

BUT BTRFS. IS. SO. DAMN. SLOW. WHYYYYYY.

I didn’t spend mumble mumble dollars on a fast SSD to throw the performance down the tube using btrfs. I’m already paying a price to have full-disk encryption, I don’t need to make things worse.

Getting 20% read performance (ext4 vs btrfs) just to have snapshots isn’t a tradeoff I wanted to make. So I started down the journey of DIY….

Introducing lvm-autosnap

I was already using lvm-on-luks as the mechanism for encrypting my entire hard drive. LVM _also_ has the ability to create snapshots, so I decided to use that as a foundation. Surprisingly, there isn’t a lot of existing tooling around managing lvm snapshots. Plus, there was one killer feature I wanted which I’m sure doesn’t exist elsewhere.

Auto-restore

That killer feature is auto-restore. As it stands, if you bork your system (which actually happened recently with the 5.19.12 kernel), the typical solution is to boot from your USB recovery image (archiso), dink around with commands, and get everything working again.

That sucks.

What if… the system automatically detected problems and offered up a previous LVM snapshot to restore to? That would be cool (I’m great at marketing, can’t you tell?)

But wait, you say, how could that work if the system is too borked to boot in the first place? (Thanks for being such a great foil). Well, the answer is to go deeper, into the initramfs.

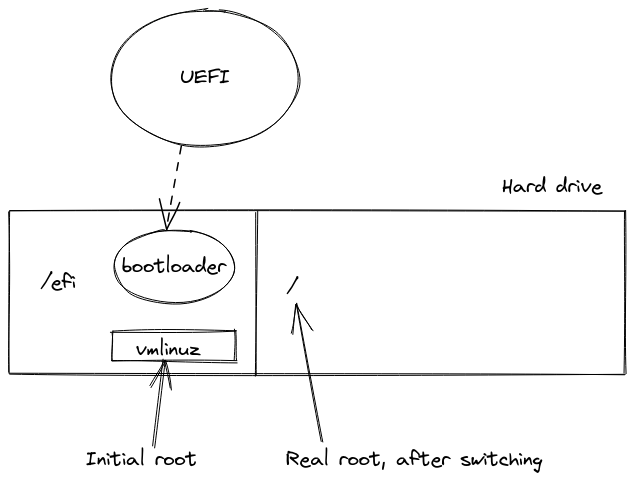

A quick detour: How your computer starts up

- Power turns on, control is handed over to the UEFI

- UEFI chooses a disk to boot off of

- UEFI looks for bootloaders from that disk (using various methods), and executes it

- The bootloader (grub/systemd-boot/etc) loads vmlinuz (the kernel image) along with the initramfs (an initial image containing early-userspace tools and other blobs), which gets unpacked into memory as a temporary root file system

- The bootloader transfers control to the kernel, which bootstraps and starts the init userspace process

- The init process continues to boot, figures out where the root (/) partition is and mounts it

- The filesystem is hot-swapped, switching from the temporary in-memory initramfs to your real root

- Booting continues as normal

SO. If we put our code inside of the initramfs (the image that lives in /efi) rather than on the main hard drive, we can run our code super-early and avoid many problems.

But wait, you say, what if the kernel won’t boot? (Geez with the questions). You’re right. Then this solution won’t work. However, there’s a simple solution to that problem as well. In the EFI partition, we just need to keep around old boot entries that we know work, so when updating the kernel, we can boot from the old one on failure. I’ve written systemd-boot-lifeboat to do just that, but you can also solve it by keeping around the linux-lts kernel as well.

How lvm-autosnap works

The idea is pretty simple. Using mkinitcpio, we can add code that runs after LVM initializes and recognizes the drive, but before the volume is mounted. From that point we can create new LVM snapshots, or recover back to old ones. Another benefit of doing backups/restores this early is data integrity. Since none of the volumes have been mounted yet, the snapshots of the different volumes (root, home, var, etc…) are all taken in a single consistent state. LVM volumes can’t be restored fully while mounted, so running the code this early allows immediate restores.

The other aspect of lvm-autosnap is determining when the computer should be restored. I made a service that runs 30 seconds after boot and marks the snapshot as good. If more than 2 consecutive boots occur without a snapshot being marked as good, lvm-autosnap interprets that as a failed environment and prompts to restore.
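
The health check described above can be sketched roughly as follows. This is a minimal illustration, not the actual lvm-autosnap code: the function names, the "good"/"pending" state labels, and the idea of passing the states as a newest-first list are all assumptions made for the example.

```sh
#!/bin/sh
# Hypothetical sketch, NOT the real lvm-autosnap implementation.
# Input: snapshot states ordered newest first, each either "good" or "pending".

count_unverified_boots() {
    count_cub=0
    # Word-split the list on purpose and stop at the first known-good snapshot
    for state_cub in $1; do
        if [ "$state_cub" = "good" ]; then
            break
        fi
        count_cub=$((count_cub + 1))
    done
    COUNT_UNVERIFIED_BOOTS_RET="$count_cub"
}

should_offer_restore() {
    # "More than 2 consecutive boots" means strictly greater than the limit.
    # $1 = unverified boot count, $2 = optional limit (defaults to 2)
    [ "$1" -gt "${2:-2}" ]
}

count_unverified_boots "pending pending pending good"
if should_offer_restore "$COUNT_UNVERIFIED_BOOTS_RET"; then
    echo "boot looks unhealthy, offering to restore a snapshot"
fi
```

The count only looks at the newest run of unverified snapshots, so a single bad boot followed by a good one never triggers the prompt.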

Challenges

I faced some unique difficulties making this work. Not too many pieces of software run this early, so both documentation and prior art are sparse. I’ll go through some of the main challenges I faced and how I solved them.

Lack of infrastructure

No grep or awk or bash. The initramfs is a very stripped down environment. My script needs to run on the ash shell (so basically POSIX compatibility only). Most of my scripting has been using bash, and I really missed some of the nice behaviors it provides, such as working with arrays. To work around this, I:

- Relied heavily on the lvm binary to do things like filtering, sorting and more via the --filter and --sort options

- Avoided dependencies on non-shell functions. I didn’t use a normal .conf file because parsing that without awk is painful. Instead, I just sourced the .env file directly

- Had to manually implement functions like trim

- Needed to be very careful about error handling and exiting the program correctly

- Made sure variables were used properly by adding unique suffixes
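
As an illustration of the kind of helper this forces you to write by hand, here is a minimal POSIX-only trim built from parameter expansion. It is a sketch, not the code from the repository; the TRIM_RET result variable and the _trim suffix are assumptions that simply follow the conventions described in this post.

```sh
#!/bin/sh
# Hypothetical sketch of a trim helper using only POSIX parameter expansion
# (no awk, sed or bash-only features).

trim() {
    value_trim="$1"
    # Strip leading whitespace: the inner expansion isolates the leading
    # whitespace run, the outer one removes it from the front.
    value_trim="${value_trim#"${value_trim%%[![:space:]]*}"}"
    # Strip trailing whitespace the same way, from the other end.
    value_trim="${value_trim%"${value_trim##*[![:space:]]}"}"
    TRIM_RET="$value_trim"
}

trim "   hello world   "
echo "[$TRIM_RET]"
```

The unique suffix on value_trim stands in for local variables, which POSIX sh does not guarantee.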

Testing

Dealing with data AND dealing with how the computer starts up? Easy way to mess up your computer. There was no way I was going to run the code on my development machine when creating the program. But setting up a VM by hand every time would have taken a long time. Luckily, I found a blog post which described how to automate setting up a VM, and I used it to set up Arch with LVM volumes already created, ready to be tested (and broken).

#!/usr/bin/env bash

# inspired from https://blog.stefan-koch.name/2020/05/31/automation-archlinux-qemu-installation

set -eufx

SCRIPT_DIR=$( cd -- "$( dirname -- "${BASH_SOURCE[0]}" )" &> /dev/null && pwd )

archive="${SCRIPT_DIR}/archlinux-bootstrap-2022.09.03-x86_64.tar.gz"

image="${SCRIPT_DIR}/vm.img"

qemu-img create -f raw "$image" 20G

loop=$(losetup --show -f -P "$image")

cleanup () {

# Keep going if a mount never happened, so the loop device still gets detached
umount "$SCRIPT_DIR/vm_mnt/home" || true

umount "$SCRIPT_DIR/vm_mnt" || true

lvchange -an testvg || true

losetup -d "$loop"

}

trap cleanup EXIT

if [ -z "$loop" ] ; then

echo "could not create the loop"

exit 1

fi

parted -s "$loop" mklabel msdos

parted -s -a optimal "$loop" mkpart primary 0% 100%

parted -s "$loop" set 1 boot on

parted -s "$loop" set 1 lvm on

pvcreate "${loop}p1"

vgcreate testvg "${loop}p1"

lvcreate -L 5G testvg -n root

lvcreate -L 5G testvg -n home

mkfs.ext4 /dev/testvg/root

mkfs.ext4 /dev/testvg/home

mkdir -p "${SCRIPT_DIR}/vm_mnt"

mount /dev/testvg/root "${SCRIPT_DIR}/vm_mnt"

mkdir -p "${SCRIPT_DIR}/vm_mnt/home"

mkdir -p "${SCRIPT_DIR}/vm_mnt/etc"

mount /dev/testvg/home "${SCRIPT_DIR}/vm_mnt/home"

tar xf "$archive" -C "$SCRIPT_DIR/vm_mnt" --strip-components 1

"$SCRIPT_DIR/vm_mnt/bin/arch-chroot" "$SCRIPT_DIR/vm_mnt" /bin/bash <<'EOF'

set -eufx

ln -sf /usr/share/zoneinfo/US/Pacific /etc/localtime

hwclock --systohc

echo en_US.UTF-8 UTF-8 >> /etc/locale.gen

locale-gen

echo LANG=en_US.UTF-8 > /etc/locale.conf

echo archvm > /etc/hostname

echo -e '127.0.0.1 localhost\n::1 localhost' >> /etc/hosts

echo '/dev/mapper/testvg-root / ext4 defaults 0 0' >> /etc/fstab

echo '/dev/mapper/testvg-home /home ext4 defaults 0 0' >> /etc/fstab

pacman-key --init

pacman-key --populate archlinux

echo 'Server = https://america.mirror.pkgbuild.com/$repo/os/$arch' >> /etc/pacman.d/mirrorlist

pacman -Sy --noconfirm archlinux-keyring

pacman -Syu --noconfirm

pacman -Syu --noconfirm base linux linux-firmware mkinitcpio dhcpcd lvm2 vim grub openssh rsync sudo base-devel cpio

sed -i 's/^HOOKS=.*/HOOKS=(base udev modconf block lvm2 filesystems keyboard fsck)/' /etc/mkinitcpio.conf

echo 'COMPRESSION="cat"' >> /etc/mkinitcpio.conf

linux_version="$(ls /lib/modules/ | sort -V | tail -n 1)"

mkinitcpio -k "$linux_version" -P

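# NOTE: assumes the image was attached as /dev/loop0; the loop variable from the outer script is not visible inside this quoted heredoc
grub-install --target=i386-pc /dev/loop0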

sed -i 's/^GRUB_PRELOAD_MODULES=.*/GRUB_PRELOAD_MODULES="part_gpt part_msdos lvm"/' /etc/default/grub

sed -i 's/^GRUB_CMDLINE_LINUX_DEFAULT=.*/GRUB_CMDLINE_LINUX_DEFAULT="rd.log=all"/' /etc/default/grub

grub-mkconfig -o /boot/grub/grub.cfg

echo root:root | chpasswd

useradd -m me

echo me:me | chpasswd

echo "PermitRootLogin yes" >> /etc/ssh/sshd_config

echo "me ALL=(ALL) ALL" >> /etc/sudoers

systemctl enable dhcpcd.service

systemctl enable sshd.service

EOF

Making the code run in initramfs

First, I needed to get my code into the initramfs at all. On Arch, that’s done with the mkinitcpio infrastructure. I added the following shell script to /usr/lib/initcpio/install/lvm-autosnap

#!/usr/bin/bash

build() {

# mkinitcpio runs everything in the same shell O_o

# To prevent breaking other scripts (e.g. when running set -f)

# run our code in a subshell, then propagate the error upwards

(

set -uf

export SCRIPT_PATH=/usr/share/lvm-autosnap

export LVM_SUPPRESS_FD_WARNINGS=1

# shellcheck source=config.sh

. "$SCRIPT_PATH/config.sh"

# shellcheck source=core.sh

. "$SCRIPT_PATH/core.sh"

# Validate the .env file is valid, otherwise fail out

config_set_defaults

load_config_from_env

validate_config

if [ -z "$VALIDATE_CONFIG_RET" ] ; then

exit 1

fi

) || exit "$?"

# Once we've validated lvm-autosnap.env

# the remaining code _must_ be run in the initial shell,

# or nothing gets actually added to the initramfs

add_binary /usr/bin/lvm-autosnap

# Add our scripts

add_file /etc/lvm-autosnap.env

add_full_dir /usr/share/lvm-autosnap

if command -v add_systemd_unit >/dev/null; then

add_systemd_unit 'lvm-autosnap-initrd.service'

add_symlink "/usr/lib/systemd/system/initrd-root-fs.target.wants/lvm-autosnap-initrd.service" "/usr/lib/systemd/system/lvm-autosnap-initrd.service"

else

add_runscript

fi

}

Basically, when you add something to the HOOKS section of mkinitcpio.conf, the infrastructure looks to see if a corresponding shell file lives in the install folder and runs it when generating the initramfs. In my case, I first validate that /etc/lvm-autosnap.env is correct, and then add the following to the initramfs:

- /usr/bin/lvm-autosnap: My CLI entrypoint for everything to do with lvm-autosnap

- /usr/share/lvm-autosnap/*: The folder where the actual implementation of lvm-autosnap lives (all the shell scripts, etc)

- /etc/lvm-autosnap.env: The configuration for which drives to backup, etc.

Now that the files are present, the last thing is to actually execute them. Here, it depends on how your initramfs is configured. Arch uses busybox by default as the initrd, but you can also configure it to use systemd instead. The former is a pretty easy system to understand. Similar to the install hook, I added a file to /usr/lib/initcpio/hooks/lvm-autosnap and this shell script is run when the computer starts up, in the same order as the HOOKS:

#! /usr/bin/ash

# shellcheck shell=dash

# N.B this is run as subshell using () rather than {} because

# 1) We don't want to affect the rest of the runtime hooks and

# 2) We never want to take down init if our script fails

run_hook() (

/usr/bin/lvm-autosnap initrd_main

)

This just calls the CLI to run the code and we’re off to the races.

Running with systemd

Busybox/sysV is a pretty easy system to use, but it has some drawbacks. Everything is run serially and recovery is not as nuanced. Systemd was designed to solve many of these challenges, and it can apply the same techniques to early-userspace (in initramfs) as well. The flow chart on the systemd docs is invaluable for understanding what happens in this case.

Anyways, when using systemd, the above run_hook is ignored and not used at all. Instead, you need to make a service, just like you would do for normal systemd. Then, the service needs to be installed in the initramfs, and a target in the initramfs needs to want it. I’m not sure if I’m doing it exactly right, but I solved this with the following couple lines of code in the install hook (from above):

add_systemd_unit 'lvm-autosnap-initrd.service'

add_symlink "/usr/lib/systemd/system/initrd-root-fs.target.wants/lvm-autosnap-initrd.service" "/usr/lib/systemd/system/lvm-autosnap-initrd.service"

This basically just adds lvm-autosnap-initrd.service to the initramfs, and symlinks it into initrd-root-fs.target.wants (which is how systemd ties services together).

The actual service isn’t much code, but it took a while to get it working correctly:

[Unit]

Description=Run the core lvm-autosnap logic to manage snapshots during boot

DefaultDependencies=no

Before=sysroot.mount

After=initrd-root-device.target

[Service]

Type=oneshot

ExecStart=/usr/bin/lvm-autosnap initrd_main

RemainAfterExit=yes

Restart=no

StandardInput=tty

StandardOutput=tty

When running this early, it’s essential to set DefaultDependencies=no for it to work properly. Then, we want the code to run after the hard drive is ready, but before it’s mounted, hence the Before/After. You can see in the bootup guide where this fits in. Importantly, this means that sysroot.mount won’t execute until this service runs (and completes, since the type is oneshot). This prevents the system from booting until lvm-autosnap completes, which is a good thing because we can create the snapshot without worrying about the system continuing to boot in the meantime. As mentioned, Type=oneshot is what makes systemd wait for the command to finish before starting sysroot.mount, and RemainAfterExit=yes solves a head-scratching case where the service would otherwise restart once switching to the real root.

Writing good shell code

This is pretty new for me. Most of my shell scripts have been relatively small and were written as very procedural things. For something larger like this, I wanted to break things into files, and have good patterns. I ended up just sourcing files at runtime to merge everything together, rather than having a build script to generate a mega-binary.

Also, I needed to figure out how to pass data back and forth to my functions. I’ve known from previous experience that using stdout is fraught because it’s really important to prevent external programs from echoing stuff. Plus you can’t print messages to the terminal that way. So, what I decided was that each function would have a global variable FUNCTION_NAME_RET where it would set the output. Calling code would be responsible to copy that variable to a local copy (otherwise it could be subsequently overwritten). It’s verbose but it gets the job done.
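
A minimal sketch of that return-value convention is below. The snapshot_name function and its naming scheme are invented for the example and are not taken from lvm-autosnap; only the FUNCTION_NAME_RET pattern itself comes from the description above.

```sh
#!/bin/sh
# Hypothetical sketch of the FUNCTION_NAME_RET convention.
# snapshot_name and the "<vg>-<lv>-snap-<n>" format are made up for illustration.

snapshot_name() {
    # Progress messages go to stderr and never pollute the "return value"
    echo "building a snapshot name for $1/$2" >&2
    SNAPSHOT_NAME_RET="${1}-${2}-snap-${3}"
}

snapshot_name testvg root 1
# Copy the result right away: the next call overwrites SNAPSHOT_NAME_RET
root_snap="$SNAPSHOT_NAME_RET"

snapshot_name testvg home 1
home_snap="$SNAPSHOT_NAME_RET"

echo "$root_snap $home_snap"
```

Because nothing is captured with $(...), the function runs in the current shell and is free to print to the terminal.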

I split out the code that calls lvm into wrapper calls. That way it’s easy to mock the results from my unit tests (using bats).
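
To show why the wrappers help, here is a small sketch of one wrapper plus a stub that shadows the lvm binary. The wrapper name and the MOCK_LVM_ARGS variable are assumptions for the example, and the stub is a plain shell function rather than anything bats-specific; the lvcreate flags (--snapshot, --size, --name) are the standard ones.

```sh
#!/bin/sh
# Hypothetical sketch: one thin wrapper around lvm, plus a test stub.

# $1 = origin volume (vg/lv), $2 = snapshot name, $3 = snapshot size
lvm_wrapper_create_snapshot() {
    lvm lvcreate --snapshot --size "$3" --name "$2" "$1"
}

# In a test, a shell function named lvm takes precedence over the real
# binary, so no volume is ever touched. It just records its arguments.
lvm() {
    MOCK_LVM_ARGS="$*"
}

lvm_wrapper_create_snapshot testvg/root root-snap-1 1G
echo "$MOCK_LVM_ARGS"
```

Keeping every lvm call behind a function like this means the rest of the logic can be tested on a machine with no LVM volumes at all.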

Lastly, a lot of code involves parsing arrays of data. I needed to be really careful with the internal field separator (IFS) to allow me to split things apart properly, without affecting downstream code.
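
Two common ways to keep an IFS change contained are sketched below. The function names and the comma-separated "name,origin,size" line are assumptions for the example, not the actual data format used by lvm-autosnap.

```sh
#!/bin/sh
# Hypothetical sketch of scoping IFS changes.
set -f  # no globbing, so unquoted expansions only get word-split

# Option 1: save IFS, split, and restore it before returning.
# $1 = string to split, $2 = separator
count_fields() {
    old_ifs_cf="$IFS"
    IFS="$2"
    # Unquoted on purpose: the shell splits $1 on the temporary IFS
    set -- $1
    IFS="$old_ifs_cf"
    COUNT_FIELDS_RET="$#"
}

# Option 2: prefix the assignment to read, so it only applies to that command.
parse_lv_line() {
    IFS=',' read -r PARSE_LV_NAME PARSE_LV_ORIGIN PARSE_LV_SIZE <<EOF_PARSE
$1
EOF_PARSE
}

count_fields "root,home,var" ","
echo "$COUNT_FIELDS_RET"

parse_lv_line "root-snap-1,root,1G"
echo "$PARSE_LV_NAME $PARSE_LV_ORIGIN $PARSE_LV_SIZE"
```

The first form is handy when looping over a list, the second when a line has a fixed number of fields.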

Results

I’m quite happy with how lvm-autosnap turned out. The shell code comes in at less than 300 lines (the rest is Markdown):

-------------------------------------------------------------------------------
Language                     files          blank        comment           code
-------------------------------------------------------------------------------
Markdown                         2            118              0            218
Bourne Shell                     3             20             10            155
BASH                             3             37             26            126
-------------------------------------------------------------------------------
TOTAL                            8            175             36            499
-------------------------------------------------------------------------------

and it works completely on autopilot. Every time I boot the computer, it evicts the oldest snapshot and creates new snapshots for my volumes. If the computer fails to boot, it automatically asks me to recover to a known-good snapshot. I learned a whole lot about how the computer starts up and how to interact with it at such an early point in time.